Timothy Morano

Jul 30, 2024 06:37

Hugging Face and NVIDIA collaborate to offer Inference-as-a-Service, enhancing AI model efficiency and accessibility for developers.

Hugging Face, a leading AI community platform, is now offering developers Inference-as-a-Service powered by NVIDIA’s NIM microservices, according to the NVIDIA Blog. The service aims to boost token efficiency by up to five times with popular AI models and provide immediate access to NVIDIA DGX Cloud.

Enhanced AI Model Efficiency

This new service, announced at the SIGGRAPH conference, allows developers to rapidly deploy leading large language models, including the Llama 3 family and Mistral AI models. These models are optimized using NVIDIA NIM microservices running on NVIDIA DGX Cloud.

Developers can prototype with open-source AI models hosted on the Hugging Face Hub and deploy them in production seamlessly. Enterprise Hub users can leverage serverless inference for increased flexibility, minimal infrastructure overhead, and optimized performance.

Streamlined AI Development

The Inference-as-a-Service offering complements the existing Train on DGX Cloud service, which is already available on Hugging Face. This integration provides developers with a centralized hub to compare various open-source models and to experiment with, test, and deploy cutting-edge models on NVIDIA-accelerated infrastructure.

The tools are easily accessible through the “Train” and “Deploy” drop-down menus on Hugging Face model cards, enabling users to get started with just a few clicks.

NVIDIA NIM Microservices

NVIDIA NIM is a set of AI microservices, including NVIDIA AI foundation models and open-source community models, optimized for inference using industry-standard APIs. NIM offers greater efficiency in processing tokens, enhancing the efficiency of the underlying NVIDIA DGX Cloud infrastructure and increasing the speed of critical AI applications.

For example, the 70-billion-parameter version of Llama 3 delivers up to 5x higher throughput when accessed as a NIM compared to off-the-shelf deployment on NVIDIA H100 Tensor Core GPU-powered systems.
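To illustrate what inference over an industry-standard API can look like in practice, here is a minimal Python sketch that builds, but does not send, a chat-completions request in the widely used OpenAI-style format. The base URL, the token, and the helper name `build_chat_request` are hypothetical placeholders rather than details from the announcement, and the model ID is only illustrative; the actual endpoint and model names should be taken from the provider's documentation.

```python
import json
import urllib.request

# Assumptions for illustration only (not from the announcement):
BASE_URL = "https://example.invalid/v1"  # placeholder for an OpenAI-style base URL
MODEL_ID = "meta-llama/Meta-Llama-3-70B-Instruct"  # illustrative model ID


def build_chat_request(prompt, api_token, max_tokens=128):
    """Build (without sending) an OpenAI-style chat-completions request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # "hf_xxx" is a dummy token; a real access token would be required to send.
    req = build_chat_request("Explain OpenUSD in one sentence.", api_token="hf_xxx")
    print(req.get_method(), req.full_url)
    print(json.dumps(json.loads(req.data), indent=2))
    # Sending would be: urllib.request.urlopen(req), once BASE_URL is a real endpoint.
```

Because the request shape is a shared convention, switching between compatible backends would typically mean changing only the base URL, token, and model ID.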

Accessible AI Acceleration

The NVIDIA DGX Cloud platform is purpose-built for generative AI, offering developers easy access to reliable accelerated computing infrastructure. This platform supports every step of AI development, from prototype to production, without requiring long-term AI infrastructure commitments.

Hugging Face’s Inference-as-a-Service on NVIDIA DGX Cloud, powered by NIM microservices, offers easy access to compute resources optimized for AI deployment. This enables users to experiment with the latest AI models in an enterprise-grade environment.

More Announcements at SIGGRAPH

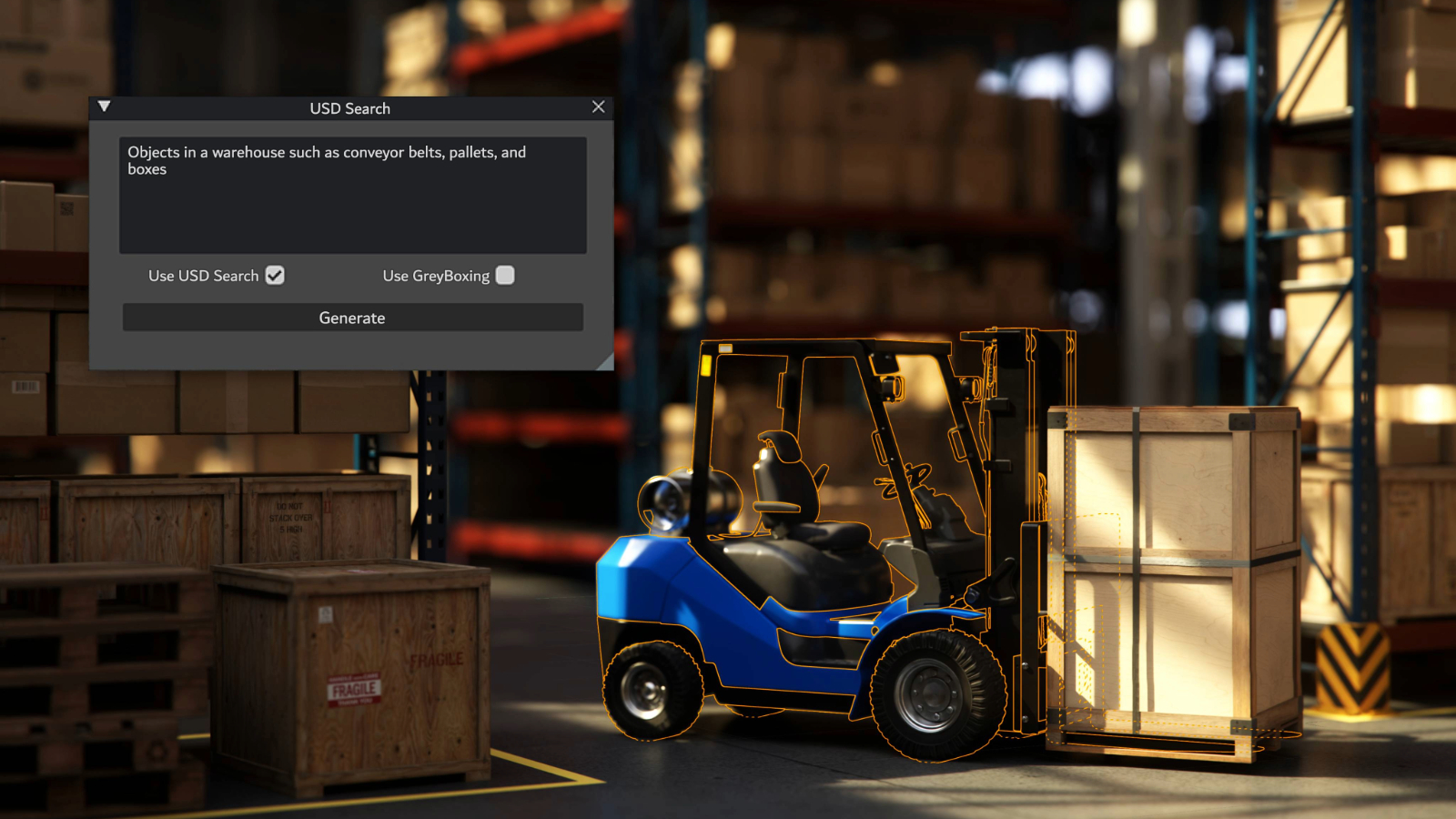

At the SIGGRAPH conference, NVIDIA also introduced generative AI models and NIM microservices for the OpenUSD framework. These aim to accelerate developers’ ability to build highly accurate virtual worlds for the next evolution of AI.

For more information, visit the official NVIDIA Blog.

Image source: Shutterstock