A new technique could put AI models on a strict energy diet, potentially cutting power consumption by up to 95% without compromising quality.

Researchers at BitEnergy AI, Inc. have developed Linear-Complexity Multiplication (L-Mul), a method that replaces energy-intensive floating-point multiplications with simpler integer additions in AI computations.

For those unfamiliar with the term, floating-point is a mathematical shorthand that lets computers handle very large and very small numbers efficiently by shifting the position of the point (the binary equivalent of a decimal point). You can think of it as scientific notation, in binary. Floating-point numbers are essential for many calculations in AI models, but they require a lot of energy and computing power. The more bits used to store each number, the more precise the model, and the more computing power it requires. FP32 is generally considered full precision, and developers often reduce precision to FP16, FP8, or even FP4 so their models can run on local hardware.
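The sign, exponent, and mantissa fields behind "scientific notation, in binary" can be inspected with Python's standard `struct` module, which packs values as FP32 (format `'f'`) or FP16 (format `'e'`). This is a small illustration of floating-point storage, not part of L-Mul; the helper names are hypothetical, and Python has no built-in FP8 or FP4 type, so those narrower formats are not shown.

```python
import struct

def fp32_fields(x: float) -> tuple[int, int, int]:
    """Return the (sign, biased exponent, mantissa) bit fields of x stored as FP32."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                # 1 sign bit
    exponent = (bits >> 23) & 0xFF   # 8 exponent bits, stored with a bias of 127
    mantissa = bits & 0x7FFFFF       # 23 mantissa (fraction) bits
    return sign, exponent, mantissa

def round_trip(x: float, fmt: str) -> float:
    """Store x in a struct format ('f' = FP32, 'e' = FP16) and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]

# -6.5 = -1.625 * 2**2: sign 1, exponent 2 + 127 = 129, fraction 0.625 * 2**23
print(fp32_fields(-6.5))        # (1, 129, 5242880)
# Fewer mantissa bits give a coarser approximation of the same value
print(round_trip(0.1, "f"))     # 0.10000000149011612
print(round_trip(0.1, "e"))     # 0.0999755859375
```

FP16 keeps only 10 mantissa bits against FP32's 23, which is why its copy of 0.1 lands noticeably further from the true value.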

AI’s voracious appetite for electricity has become a growing concern. ChatGPT alone consumes 564 MWh daily, enough to power 18,000 American homes. The overall AI industry is expected to consume 85-134 TWh annually by 2027, roughly the same as Bitcoin mining operations, according to estimates shared by the Cambridge Centre for Alternative Finance.

L-Mul tackles AI's energy problem head-on by rethinking how AI models handle calculations. Instead of performing complex floating-point multiplications, L-Mul approximates them using integer additions. So, for example, instead of multiplying 123.45 by 67.89 directly, L-Mul breaks the operation down into smaller, simpler steps built from additions. This makes the calculations faster and less energy-hungry while largely preserving accuracy.
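To make the idea concrete, here is a minimal Python sketch of L-Mul applied to ordinary floats. It assumes the formulation from BitEnergy AI's paper rather than anything stated in this article: each number is written as (1 + mantissa) × 2^exponent, and the exact product (1 + xm + ym + xm·ym) × 2^(xe+ye) is replaced by (1 + xm + ym + 2^-l(m)) × 2^(xe+ye), where the offset exponent l(m) depends on the number of mantissa bits m. The function names and the default m = 3 (the mantissa width of an FP8 E4M3 number) are illustrative choices. The sketch ignores exponent range limits and runs in software, so it shows the arithmetic, not the energy savings.

```python
import math

def l_of_m(m: int) -> int:
    """Exponent of the correction term 2**-l(m) (values assumed from the L-Mul paper)."""
    if m <= 3:
        return m
    if m == 4:
        return 3
    return 4

def decompose(x: float, m: int) -> tuple[int, float, int]:
    """Write nonzero x as (sign, frac, exp) with |x| ~= (1 + frac) * 2**exp, frac truncated to m bits."""
    sign = 1 if x < 0 else 0
    f, e = math.frexp(abs(x))                # |x| = f * 2**e with 0.5 <= f < 1
    frac = 2.0 * f - 1.0                     # |x| = (1 + frac) * 2**(e - 1)
    frac = math.floor(frac * 2**m) / 2**m    # emulate keeping only m mantissa bits
    return sign, frac, e - 1

def l_mul(x: float, y: float, m: int = 3) -> float:
    """Approximate x * y as (1 + xm + ym + 2**-l(m)) * 2**(xe + ye), sign = XOR of signs."""
    if x == 0.0 or y == 0.0:
        return 0.0
    xs, xm, xe = decompose(x, m)
    ys, ym, ye = decompose(y, m)
    # The exact product needs the mantissa product xm * ym;
    # L-Mul replaces it with a constant, so only additions remain.
    mantissa = 1.0 + xm + ym + 2.0 ** -l_of_m(m)
    magnitude = math.ldexp(mantissa, xe + ye)  # scaling by 2**(xe + ye) is an exponent addition
    return -magnitude if xs ^ ys else magnitude

print(l_mul(123.45, 67.89))   # 8192.0 (exact product is about 8381.02)
print(l_mul(-2.0, 3.0))       # -6.5   (exact product is -6.0)
```

The only work done on the mantissas is addition; the remaining power-of-two scaling is what hardware handles by adding exponents.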

The results look promising. “Applying L-Mul operation in tensor processing hardware can potentially reduce 95% energy cost by element-wise floating point tensor multiplications and 80% energy cost of dot products,” the researchers claim. Put simply: on hardware built to support it, the element-wise multiplications inside a model's tensors could use up to 95% less energy, and dot products, the building block of the matrix multiplications that dominate AI workloads, could use up to 80% less, according to this research.

The algorithm’s impact extends beyond energy savings. L-Mul outperforms current 8-bit standards in some cases, achieving higher precision while using significantly less bit-level computation. Tests across natural language processing, vision tasks, and symbolic reasoning showed an average performance drop of just 0.07%, a negligible tradeoff for the potential energy savings.

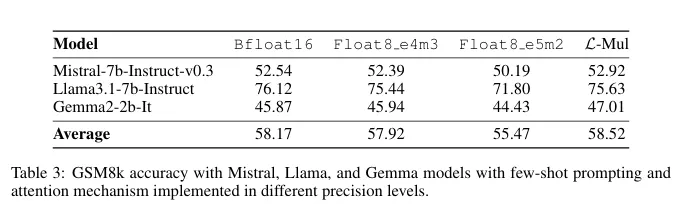

Transformer-based models, the backbone of large language models like GPT, could benefit greatly from L-Mul. The algorithm integrates seamlessly into the attention mechanism, a computationally intensive part of these models. Tests on popular models such as Llama, Mistral, and Gemma even revealed some accuracy gains on certain vision tasks.
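The attention integration can be sketched in the same spirit. The snippet below is self-contained, so it repeats a compact version of the L-Mul formula (again assumed from the paper, not from this article), and computes scaled dot-product attention weights for a single query, approximating each q·k product with L-Mul while leaving the accumulation, scaling, and softmax in ordinary floating point. Keeping those additions is likely one reason the quoted savings for dot products (80%) are lower than for element-wise multiplications (95%). The function names are hypothetical, and a real kernel would operate on whole tensors rather than Python lists.

```python
import math

def l_mul(x: float, y: float, m: int = 3) -> float:
    """Compact L-Mul sketch: (1 + xm + ym + 2**-l(m)) * 2**(xe + ye), mantissas truncated to m bits."""
    if x == 0.0 or y == 0.0:
        return 0.0
    offset = 2.0 ** -(m if m <= 3 else (3 if m == 4 else 4))
    fx, ex = math.frexp(abs(x))
    fy, ey = math.frexp(abs(y))
    xm = math.floor((2 * fx - 1) * 2**m) / 2**m
    ym = math.floor((2 * fy - 1) * 2**m) / 2**m
    mag = math.ldexp(1.0 + xm + ym + offset, (ex - 1) + (ey - 1))
    return -mag if (x < 0) != (y < 0) else mag

def l_dot(a: list[float], b: list[float], m: int = 3) -> float:
    """Dot product: each product uses L-Mul, the running sum stays an ordinary addition."""
    return sum(l_mul(x, y, m) for x, y in zip(a, b))

def attention_weights(q: list[float], keys: list[list[float]], m: int = 3) -> list[float]:
    """Scaled dot-product attention weights for one query, with L-Mul in the q·k products."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [l_dot(q, k, m) * scale for k in keys]
    peak = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

q = [1.0, 0.5]
keys = [[1.0, 0.5], [-1.0, 0.25]]
print(attention_weights(q, keys))  # the first key, aligned with q, gets the larger weight
```

Only the q·k products are swapped out here; whether the scaling and softmax should also use L-Mul is a separate design decision this sketch does not attempt.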

At an operational level, L-Mul’s advantages become even clearer. The research shows that multiplying two FP8 numbers (a low-precision format AI models commonly use today) requires 325 operations, while L-Mul uses only 157, less than half. “To summarize the error and complexity analysis, L-Mul is both more efficient and more accurate than fp8 multiplication,” the study concludes.

But nothing is perfect, and this technique has a major Achilles’ heel: it requires a specific type of hardware, and today’s hardware isn’t optimized to take full advantage of it.

Plans for specialized hardware that natively supports L-Mul calculations may already be in motion. “To unlock the full potential of our proposed method, we will implement the L-Mul and L-Matmul kernel algorithms on hardware level and develop programming APIs for high-level model design,” the researchers say. This could lead to a new generation of AI models that are fast, accurate, and very cheap to run, making energy-efficient AI a real possibility.
