If you're not a developer, then why on earth would you want to run an open-source AI model on your home computer?

It turns out there are a number of good reasons. And with free, open-source models getting better than ever (and simple to use, with minimal hardware requirements), now is a great time to give it a shot.

Here are a few reasons why open-source models are better than paying $20 a month to ChatGPT, Perplexity, or Google:

It's free. No subscription fees.

Your data stays on your machine.

It works offline, no internet required.

You can train and customize your model for specific use cases, such as creative writing or… well, anything.

The barrier to entry has collapsed. Now there are specialized programs that let users experiment with AI without all the hassle of installing libraries, dependencies, and plugins independently. Virtually anyone with a fairly recent computer can do it: A mid-range laptop or desktop with 8GB of video memory can run surprisingly capable models, and some models run on 6GB or even 4GB of VRAM. And for Apple, any M-series chip (from the last few years) will be able to run optimized models.

The software is free, the setup takes minutes, and the most intimidating step (choosing which tool to use) comes down to a simple question: Do you prefer clicking buttons or typing commands?

LM Studio vs. Ollama

Two platforms dominate the local AI space, and they approach the problem from opposite angles.

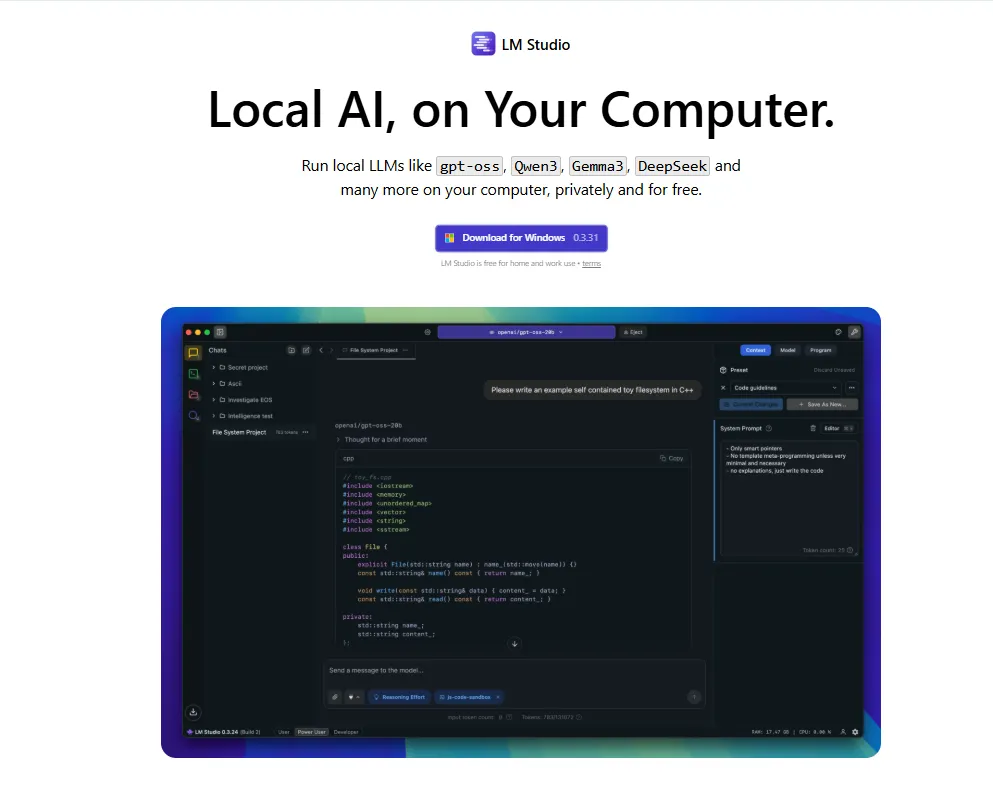

LM Studio wraps everything in a polished graphical interface. You can simply download the app, browse a built-in model library, click to install, and start chatting. The experience mirrors using ChatGPT, except the processing happens on your hardware. Windows, Mac, and Linux users get the same smooth experience. For newcomers, this is the obvious starting point.

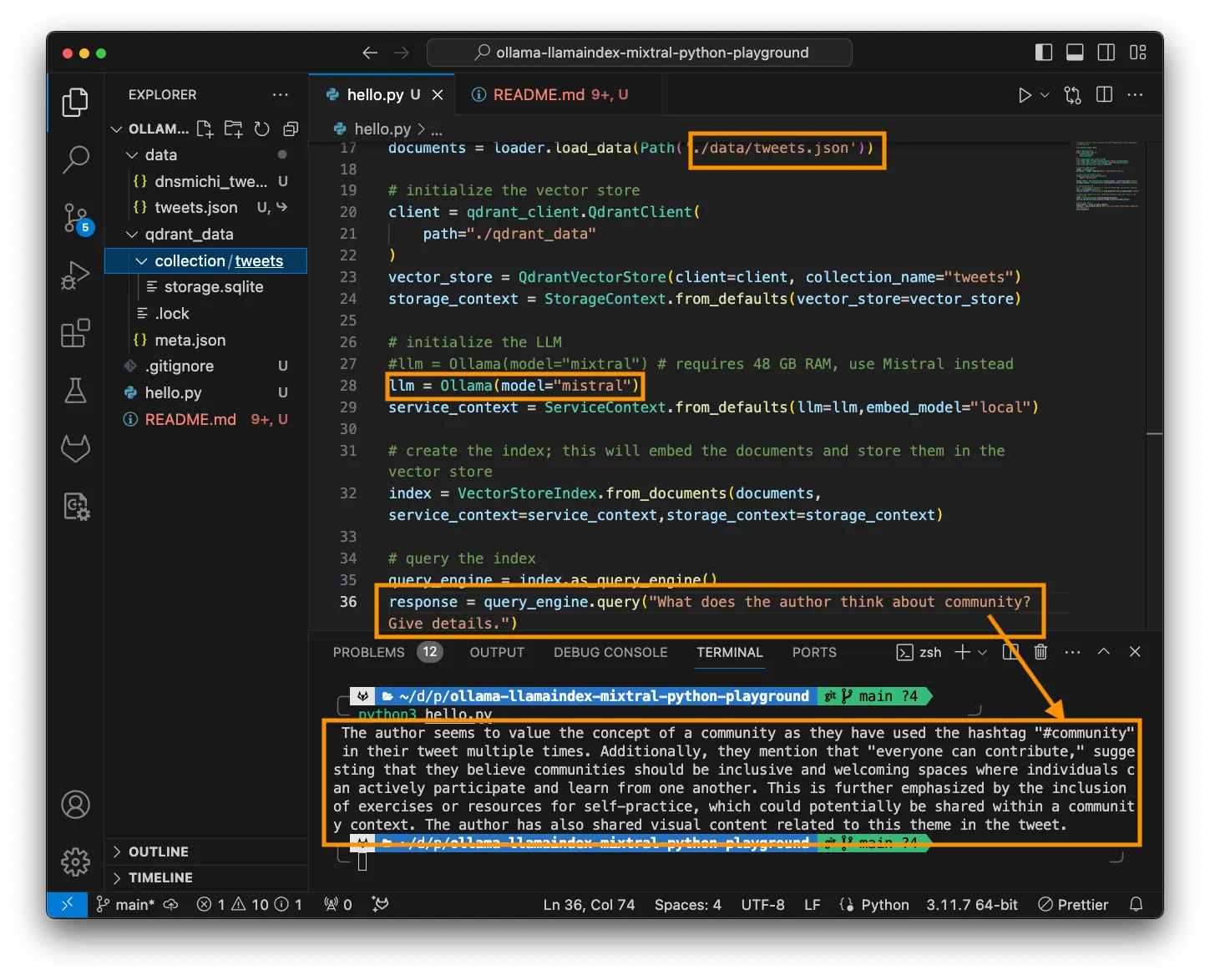

Ollama is aimed at developers and power users who live in the terminal. Install via the command line, pull models with a single command, and then script or automate to your heart's content. It's lightweight, fast, and integrates cleanly into programming workflows.

The learning curve is steeper, but the payoff is flexibility. It is also what power users choose for versatility and customizability.

Both tools run the same underlying models using identical optimization engines. Performance differences are negligible.
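To give a sense of what "script or automate" can look like with Ollama, here is a minimal sketch that sends one prompt to a locally running Ollama server over its HTTP API. It assumes Ollama is installed, running on its default port (11434), and that a model has already been pulled; the function names `build_payload` and `ask` are illustrative and not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(model: str, prompt: str) -> dict:
    """Build the JSON body for a single, non-streaming completion."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask(model: str, prompt: str, url: str = OLLAMA_URL) -> str:
    """Send the prompt to the local Ollama server and return the reply text."""
    body = json.dumps(build_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

With the server running, a call such as `ask("qwen3:8b", "Explain VRAM in one sentence.")` should return the model's answer as a string. The model tag here is only an example; use whichever model you have pulled.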

Setting up LM Studio

Visit https://lmstudio.ai/ and download the installer for your operating system. The file weighs about 540MB. Run the installer and follow the prompts. Launch the application.

Hint 1: If it asks you which type of user you are, pick “developer.” The other profiles simply hide options to make things easier.

Hint 2: It will recommend downloading OSS, OpenAI's open-source AI model. Instead, click “skip” for now; there are better, smaller models that will do a better job.

VRAM: The key to running local AI

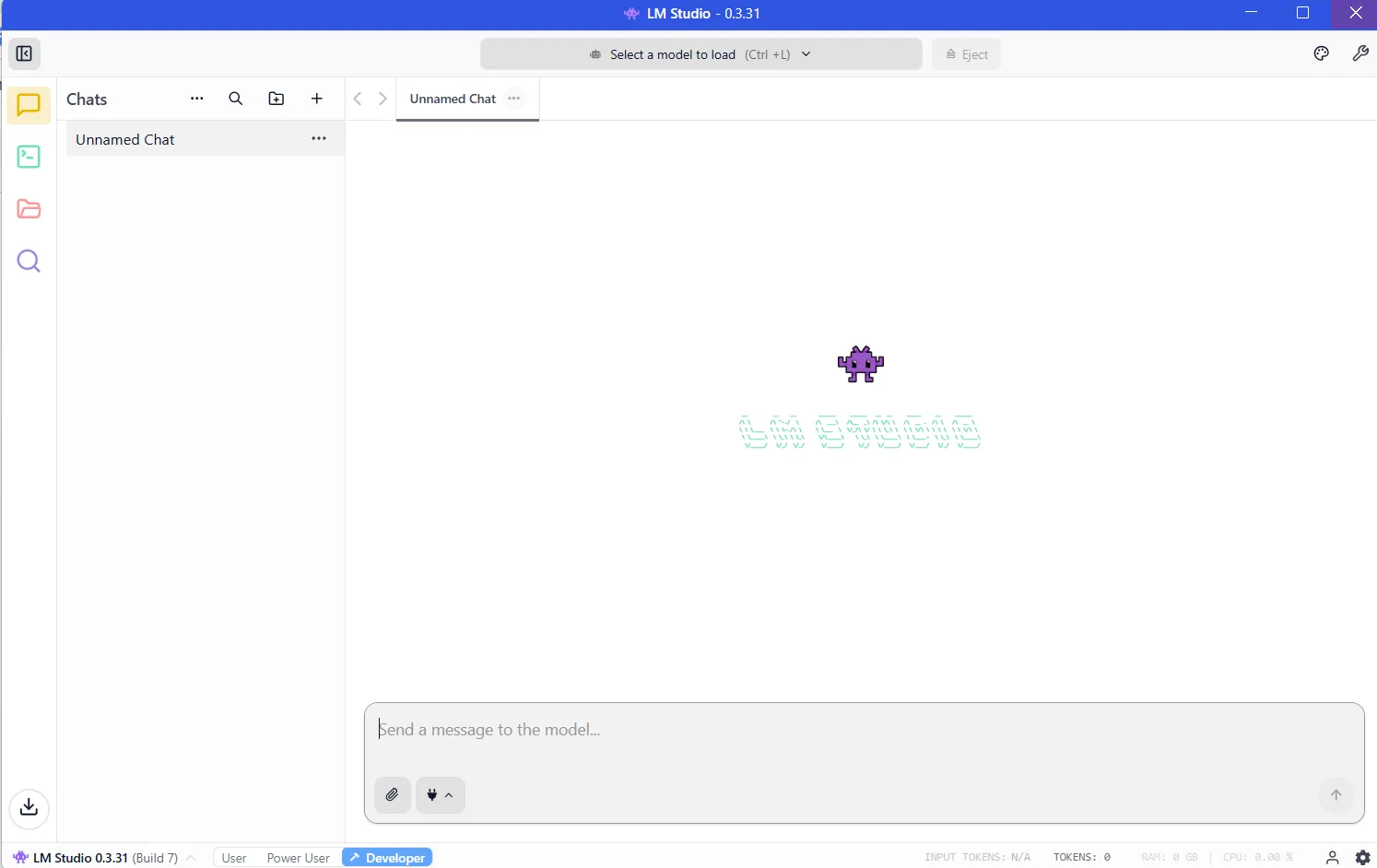

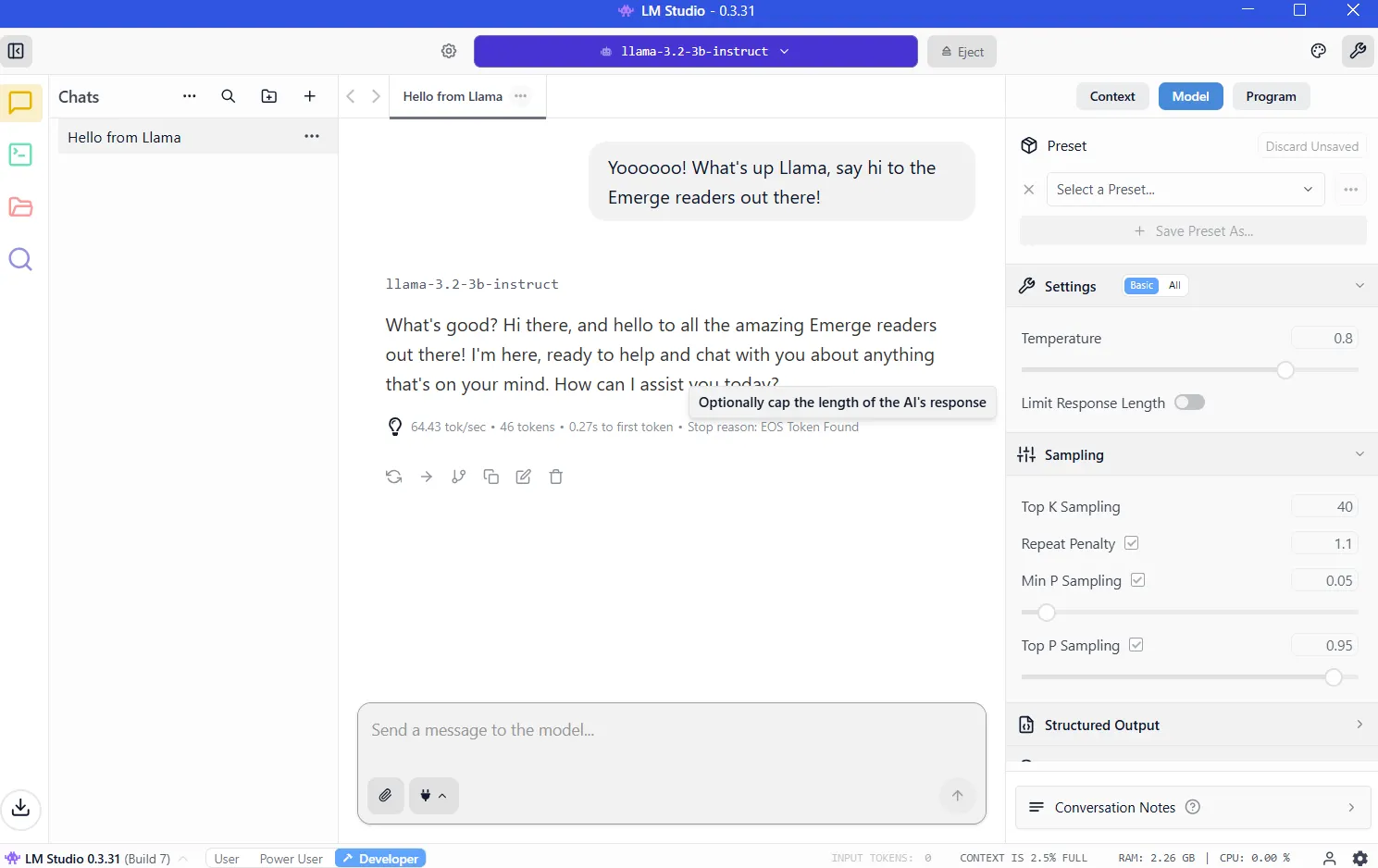

Once you have installed LM Studio, the program will be ready to run and will look like this:

Now you need to download a model before your LLM will work. And the more powerful the model, the more resources it will require.

The critical resource is VRAM, or video memory on your graphics card. LLMs load into VRAM during inference. If you don't have enough space, then performance collapses and the system must fall back on slower system RAM. You'll want to avoid that by having enough VRAM for the model you want to run.

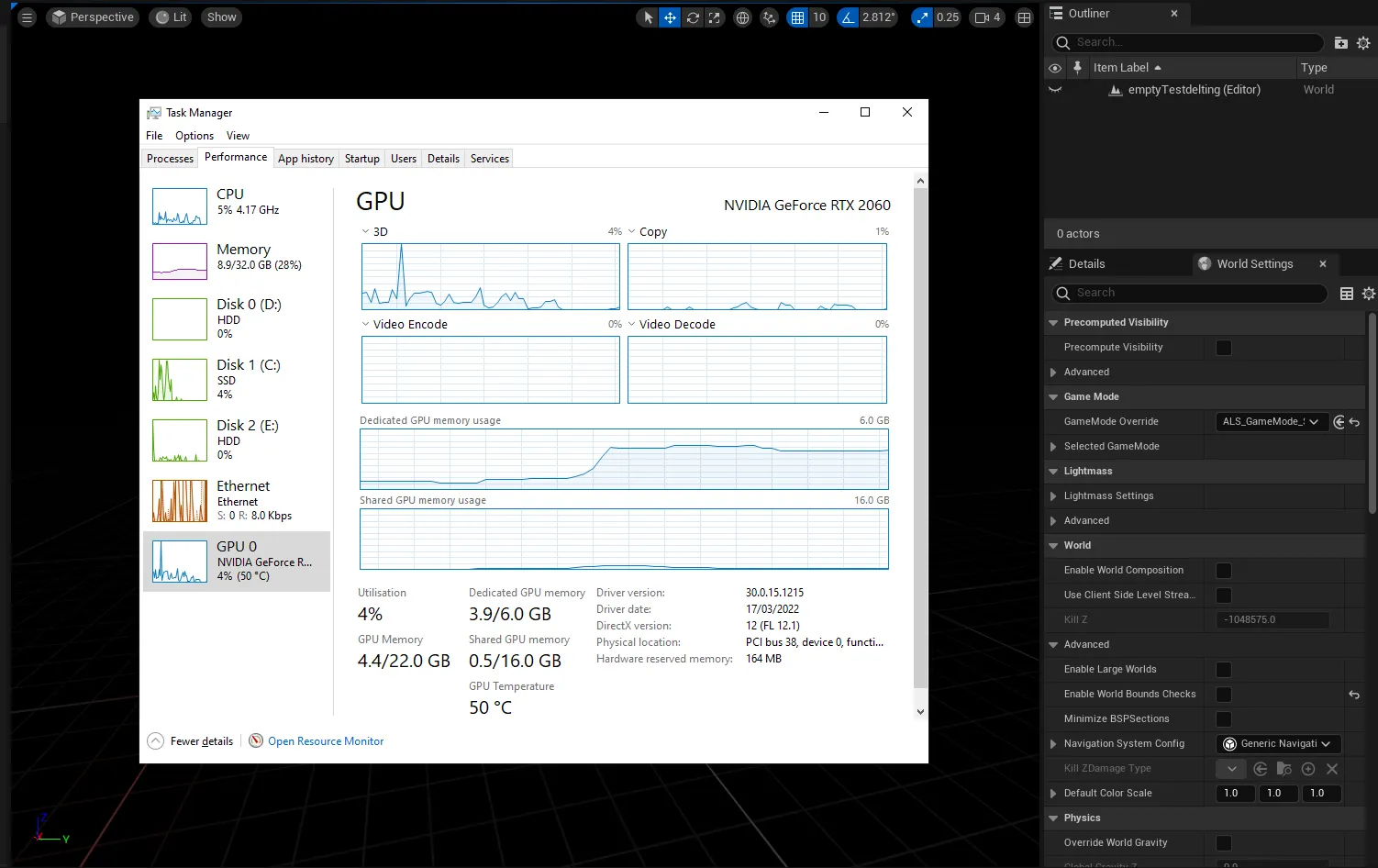

To find out how much VRAM you have, open the Windows Task Manager (Ctrl+Shift+Esc, or Ctrl+Alt+Del and choose Task Manager), go to the Performance tab, and click on GPU, making sure you have selected the dedicated graphics card and not the integrated graphics on your Intel/AMD processor.

You will see how much VRAM you have in the “Dedicated GPU memory” section.
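If you prefer a script to clicking through Task Manager, the following sketch reads the same number from the command line. It assumes an NVIDIA card with the `nvidia-smi` tool installed (it ships with NVIDIA's drivers); on machines without it, the script simply prints nothing. The helper names are illustrative.

```python
import shutil
import subprocess


def parse_vram_mib(output: str) -> list:
    """Turn nvidia-smi's one-number-per-line output into a list of MiB values."""
    lines = [line.strip() for line in output.splitlines()]
    return [int(line) for line in lines if line.isdigit()]


def query_vram_mib() -> list:
    """Return total VRAM per NVIDIA GPU in MiB, or an empty list if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []  # no NVIDIA driver tools found on this machine
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return []  # tool is present but could not talk to a GPU
    return parse_vram_mib(result.stdout)


for index, mib in enumerate(query_vram_mib()):
    print(f"GPU {index}: {mib / 1024:.1f} GiB of VRAM")
```

AMD and Intel GPUs need different tools, so treat this as one option rather than a universal check.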

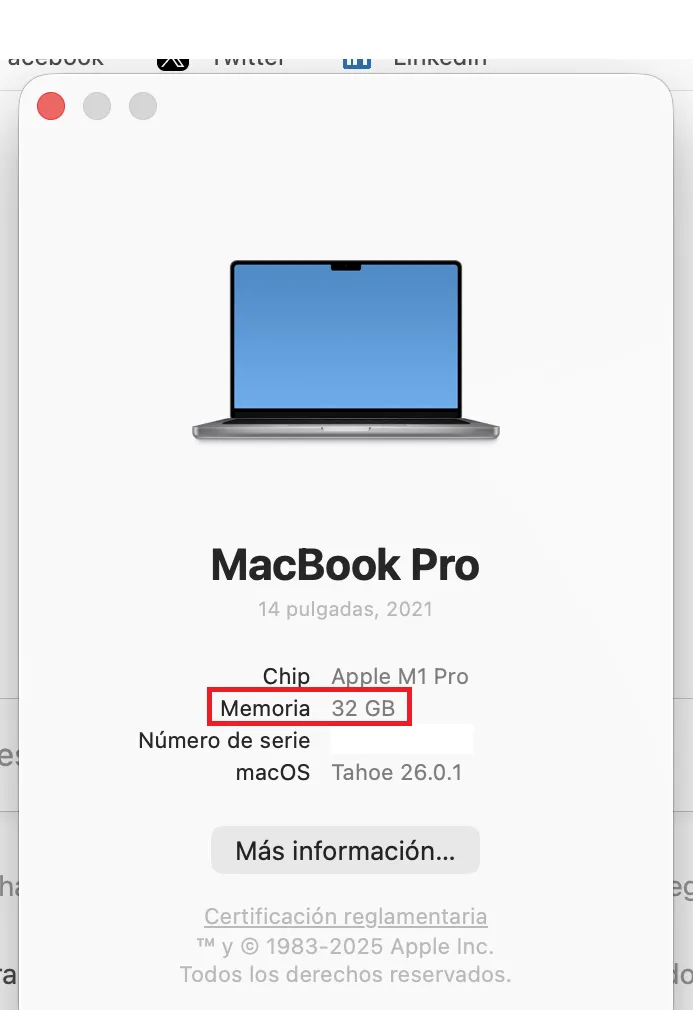

On M-series Macs, things are easier since RAM and VRAM are shared. The amount of RAM on your machine is roughly the VRAM you can access, minus whatever the system itself needs.

To check, click on the Apple logo, then click on “About This Mac.” See Memory? That's how much VRAM you have.

You'll want at least 8GB of VRAM. Models in the 7 to 9 billion parameter range, compressed using 4-bit quantization, fit comfortably while delivering strong performance. You'll know if a model is quantized because developers usually disclose it in the name. If you see BF, FP, or GGUF in the name, then you are looking at a quantized model. The lower the number (FP32, FP16, FP8, FP4), the fewer resources it will consume.
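The arithmetic behind that rule of thumb can be sketched in a few lines: the weights take roughly (parameters × bits per weight ÷ 8) bytes. The 20% overhead factor for context and runtime buffers below is an assumption for illustration, not a measured figure, and real usage varies with context length and software.

```python
def weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8


def fits(params_billions: float, bits_per_weight: float,
         vram_gb: float, overhead: float = 1.2) -> bool:
    """Rough check: weights times an assumed overhead factor must fit in VRAM."""
    return weights_gb(params_billions, bits_per_weight) * overhead <= vram_gb


for bits in (16, 8, 4):
    size = weights_gb(8, bits)
    print(f"8B model at {bits}-bit: ~{size:.0f} GB of weights, "
          f"fits in 8 GB: {fits(8, bits, 8)}")
```

By this estimate, an 8-billion-parameter model needs about 16 GB at 16-bit, 8 GB at 8-bit, and 4 GB at 4-bit, which is why only the 4-bit version sits comfortably on an 8GB card.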

It's not apples to apples, but think of quantization as the resolution of your screen. You will see the same image in 8K, 4K, 1080p, or 720p. You will be able to understand everything no matter the resolution, but zooming in and being picky about the details will reveal that a 4K image has more information than a 720p one, though it requires more memory and resources to render.

But ideally, if you are really serious, then you should buy a nice gaming GPU with 24GB of VRAM. It doesn't matter whether it is new or not, and it doesn't matter how fast or powerful it is. In the land of AI, VRAM is king.

Once you know how much VRAM you can tap, you can figure out which models you can run by going to the VRAM Calculator. Or, simply start with smaller models of less than 4 billion parameters and then step up to bigger ones until your computer tells you that you don't have enough memory. (More on this technique in a bit.)

Downloading your models

Once you know your hardware's limits, it's time to download a model. Click on the magnifying glass icon on the left sidebar and search for the model by name.

Qwen and DeepSeek are good models to begin your journey with. Yes, they are Chinese, but if you are worried about being spied on, then you can rest easy. When you run your LLM locally, nothing leaves your machine, so you won't be spied on by the Chinese, the U.S. government, or any corporate entities.

As for viruses, everything we're recommending comes via Hugging Face, where software is instantly checked for spyware and other malware. But for what it's worth, the best American model is Meta's Llama, so you may want to pick that if you are a patriot. (We offer other recommendations in the final section.)

Note that models do behave differently depending on the training dataset and the fine-tuning techniques used to build them. Elon Musk's Grok notwithstanding, there is no such thing as an unbiased model because there is no such thing as unbiased information. So pick your poison depending on how much you care about geopolitics.

For now, download both the 3B (smaller, less capable) and 7B versions. If you can run the 7B, then delete the 3B (and try downloading and running the 13B version, and so on). If you can't run the 7B version, then delete it and use the 3B version.

Once downloaded, load the model from the My Models section. The chat interface appears. Type a message. The model responds. Congratulations: You're running a local AI.

Giving your model internet access

Out of the box, local models can't browse the web. They're isolated by design, so you will interact with them based on their internal knowledge. They'll work fine for writing short stories, answering questions, doing some coding, and so on. But they won't give you the latest news, tell you the weather, check your email, or schedule meetings for you.

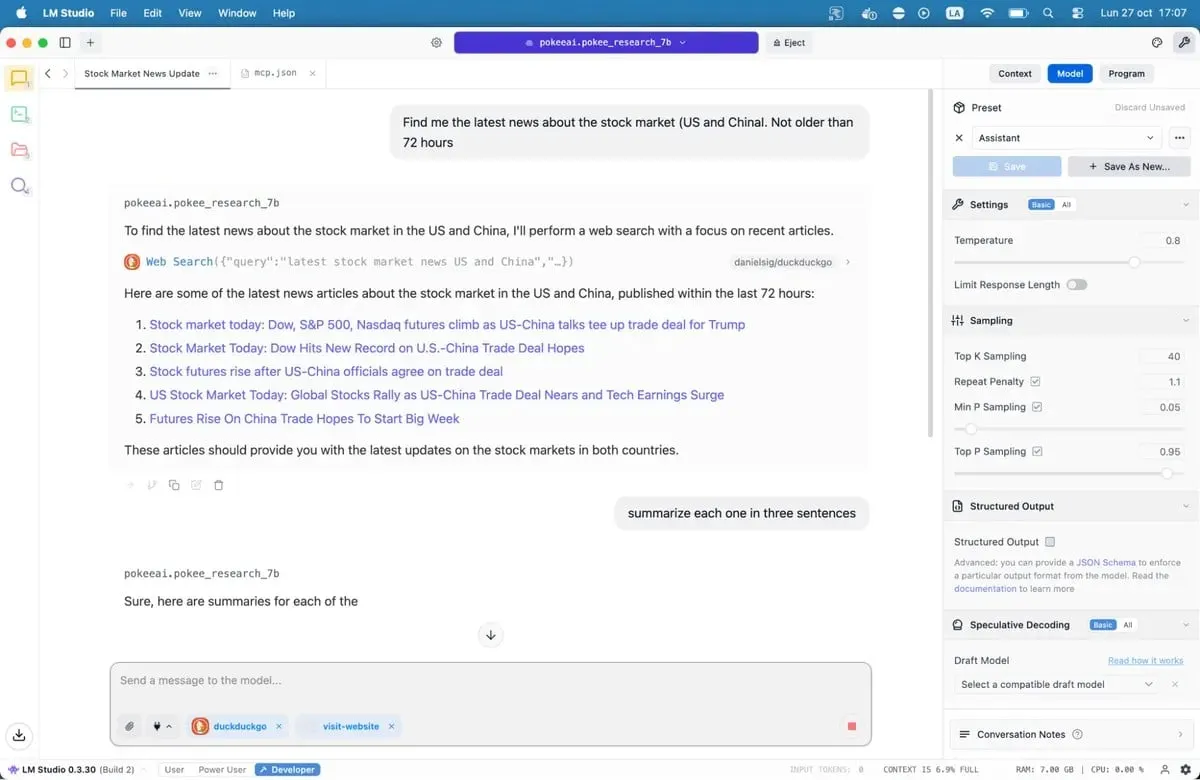

Model Context Protocol servers change this.

MCP servers act as bridges between your model and external services. Want your AI to search Google, check GitHub repositories, or read websites? MCP servers make it possible. LM Studio added MCP support in version 0.3.17, accessible through the Program tab. Each server exposes specific tools: web search, file access, API calls.

If you want to give models access to the internet, then our complete guide to MCP servers walks through the setup process, including popular options like web search and database access.

Once you have added a server to LM Studio's MCP configuration file, save the file and LM Studio will automatically load the servers. When you chat with your model, it can now call these tools to fetch live data. Your local AI just gained superpowers.
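For orientation, here is a rough sketch of what such a configuration file might contain, generated from Python so the structure is easy to see. It assumes LM Studio's MCP file uses the `mcpServers` layout common to MCP clients; the server name, command, and package below are hypothetical placeholders, so substitute the values given by the MCP server you actually install.

```python
import json

# Hypothetical server entry: the name, command, and arguments are placeholders,
# not a real package. Replace them with the details of your chosen MCP server.
config = {
    "mcpServers": {
        "example-web-search": {
            "command": "npx",
            "args": ["-y", "example-mcp-web-search"],
        }
    }
}

# Print the JSON you would paste into the MCP configuration file.
print(json.dumps(config, indent=2))
```

Each key under `mcpServers` describes one server, so adding a second tool means adding a second entry alongside the first.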

Our recommended models for 8GB systems

There are literally hundreds of LLMs available to you, from jack-of-all-trades options to fine-tuned models designed for specialized use cases like coding, medicine, role play, or creative writing.

Best for coding: Nemotron or DeepSeek are good. They won't blow your mind, but will work fine for code generation and debugging, outperforming most alternatives in programming benchmarks. DeepSeek-Coder-V2 6.7B offers another solid option, particularly for multilingual development.

Best for general knowledge and reasoning: Qwen3 8B. The model has strong mathematical capabilities and handles complex queries effectively. Its context window accommodates longer documents without losing coherence.

Best for creative writing: DeepSeek R1 variants, but you need some heavy prompt engineering. There are also uncensored fine-tunes like the “abliterated-uncensored-NEO-Imatrix” version of OpenAI's GPT-OSS, which is good for horror; or Dirty-Muse-Writer, which is good for erotica (so they say).

Best for chatbots, role-playing, interactive fiction, customer service: Mistral 7B (especially Undi95 DPO Mistral 7B) and Llama variants with large context windows. MythoMax L2 13B maintains character traits across long conversations and adapts tone naturally. For other NSFW role-play, there are many options. You may want to check some of the models on this list.

For MCP: Jan-v1-4b and Pokee Research 7b are good models if you want to try something new. DeepSeek R1 is another good option.

All of these models can be downloaded directly from LM Studio if you just search for their names.

Note that the open-source LLM landscape is shifting fast. New models launch weekly, each claiming improvements. You can check them out in LM Studio, or browse through the different repositories on Hugging Face. Test options out for yourself. Bad fits become obvious quickly, thanks to awkward phrasing, repetitive patterns, and factual errors. Good models feel different. They reason. They surprise you.

The technology works. The software is ready. Your computer probably already has enough power. All that's left is trying it.
