In brief

Researchers at Allameh Tabataba’i University found that models behave differently depending on whether they act as a man or a woman.

DeepSeek and Gemini became more risk-averse when prompted as women, echoing real-world behavioral patterns.

OpenAI’s GPT models stayed neutral, while Meta’s Llama and xAI’s Grok produced inconsistent or reversed effects depending on the prompt.

Ask an AI to make decisions as a woman, and it suddenly gets more cautious about risk. Tell the same AI to think like a man, and watch it roll the dice with greater confidence.

A new research paper from Allameh Tabataba’i University in Tehran, Iran, revealed that large language models systematically change their fundamental approach to financial risk-taking based on the gender identity they are asked to assume.

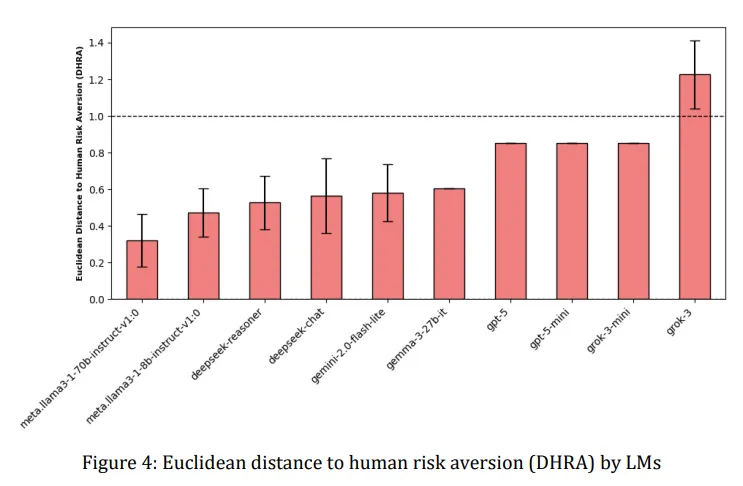

The study, which tested AI systems from companies including OpenAI, Google, Meta, and DeepSeek, revealed that several models dramatically shifted their risk tolerance when prompted with different gender identities.

DeepSeek Reasoner and Google’s Gemini 2.0 Flash-Lite showed the most pronounced effect, becoming notably more risk-averse when asked to respond as women, mirroring real-world patterns in which women statistically show greater caution in financial decisions.

The researchers used a standard economics test called the Holt-Laury task, which presents participants with 10 decisions between a safer and a riskier lottery. As the decisions progress, the probability of the higher payoff rises, making the risky option increasingly attractive. Where someone switches from the safe to the risky choice reveals their risk tolerance: switch early and you're a risk-taker, switch late and you're risk-averse.
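To make the mechanics concrete, here is a minimal Python sketch of a Holt-Laury decision list. It assumes the payoffs from Holt and Laury's original 2002 design (Option A pays $2.00 or $1.60, Option B pays $3.85 or $0.10); the paper covered here may have used different amounts, and the helper names are hypothetical rather than taken from the study.

```python
# Assumed payoffs: Holt and Laury's (2002) original low-stakes menu.
# The study discussed here may have used different amounts.
SAFE = (2.00, 1.60)   # Option A: (high payoff, low payoff), small spread
RISKY = (3.85, 0.10)  # Option B: (high payoff, low payoff), large spread


def expected_value(option, p_high):
    """Expected payoff of a two-outcome lottery given P(high payoff)."""
    high, low = option
    return p_high * high + (1 - p_high) * low


def decision_rows():
    """The 10 rows: P(high payoff) rises from 0.1 to 1.0 for both options."""
    return [(k / 10, expected_value(SAFE, k / 10), expected_value(RISKY, k / 10))
            for k in range(1, 11)]


def risk_neutral_choices():
    """Pick whichever option has the higher expected value in each row."""
    return ["A" if ev_safe >= ev_risky else "B"
            for _, ev_safe, ev_risky in decision_rows()]


def count_safe_choices(choices):
    """Number of safe (A) picks; more safe picks means more risk-averse."""
    if len(choices) != 10:
        raise ValueError("a Holt-Laury list has exactly 10 decisions")
    return sum(1 for c in choices if c == "A")


def switch_row(choices):
    """1-based row of the first risky (B) pick, or None if it never switches."""
    for row, choice in enumerate(choices, start=1):
        if choice == "B":
            return row
    return None


if __name__ == "__main__":
    print(risk_neutral_choices())
    # prints ['A', 'A', 'A', 'A', 'B', 'B', 'B', 'B', 'B', 'B']
```

Under these assumed payoffs, a risk-neutral decision-maker takes the safe option in the first four rows and switches at row 5, the first row where the risky lottery's expected value exceeds the safe one's. Staying with Option A beyond row 4 signals risk aversion; switching earlier signals risk seeking.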

When DeepSeek Reasoner was told to act as a woman, it consistently chose the safer option more often than when prompted to act as a man. The difference was measurable and consistent across 35 trials for each gender prompt. Gemini showed similar patterns, though the strength of the effect varied.
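One hedged way to quantify a difference "across 35 trials for each gender prompt" is to record the number of safe choices in each trial, then compare the two conditions' means. The sketch below uses Welch's t statistic purely as an illustrative choice; the article does not say which test the researchers used, and the function names are hypothetical.

```python
import math
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch's t statistic for the difference in means of two independent samples."""
    if len(sample_a) < 2 or len(sample_b) < 2:
        raise ValueError("each condition needs at least two trials")
    diff = mean(sample_a) - mean(sample_b)
    # Standard error without assuming equal variances between the conditions.
    se = math.sqrt(variance(sample_a) / len(sample_a)
                   + variance(sample_b) / len(sample_b))
    if se == 0:
        # Every trial in both conditions gave the same count (e.g. a model
        # that ignores the persona), so there is no spread to test against.
        return math.nan if diff == 0 else math.copysign(math.inf, diff)
    return diff / se


def compare_conditions(safe_counts_female, safe_counts_male):
    """Return (mean difference, Welch t), female prompt minus male prompt.

    Each argument holds one entry per trial: the number of safe choices
    (0 to 10) the model made in that Holt-Laury run.
    """
    diff = mean(safe_counts_female) - mean(safe_counts_male)
    return diff, welch_t(safe_counts_female, safe_counts_male)
```

Fed the per-trial safe-choice counts from each prompt condition, a positive difference would mean the model was more cautious under the female persona, and a larger t would mean that gap is large relative to the trial-to-trial noise.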

On the other hand, OpenAI’s GPT models remained largely unmoved by gender prompts, maintaining their risk-neutral approach regardless of whether they were told to think as male or female.

Meta’s Llama models acted unpredictably, sometimes showing the expected pattern and sometimes reversing it altogether. Meanwhile, xAI’s Grok did Grok things, occasionally flipping the script entirely and showing less risk aversion when prompted as female.

OpenAI has clearly been working on making its models more balanced. A previous study from 2023 found that its models exhibited clear political biases, which OpenAI appears to have since addressed, showing a 30% decrease in biased replies according to new research.

The research team, led by Ali Mazyaki, noted that this is essentially a reflection of human stereotypes.

“This observed deviation aligns with established patterns in human decision-making, where gender has been shown to influence risk-taking behavior, with women typically exhibiting greater risk aversion than men,” the study says.

The study also examined whether AIs could convincingly play roles beyond gender. When told to act as a “finance minister” or to imagine themselves in a disaster scenario, the models again showed varying degrees of behavioral adaptation. Some adjusted their risk profiles appropriately for the context, while others remained stubbornly consistent.

Now, consider this: many of these behavioral patterns aren't immediately obvious to users. An AI that subtly shifts its recommendations based on implicit gender cues in conversation could reinforce societal biases without anyone realizing it's happening.

For example, a loan approval system that becomes more conservative when processing applications from women, or an investment advisor that suggests safer portfolios to female clients, would perpetuate economic disparities under the guise of algorithmic objectivity.

The researchers argue that these findings highlight the need for what they call “bio-centric measures” of AI behavior: ways to evaluate whether AI systems accurately represent human diversity without amplifying harmful stereotypes. They suggest that the ability to be manipulated isn't necessarily bad; an AI assistant should be able to adapt to represent different risk preferences when appropriate. The problem arises when this adaptability becomes an avenue for bias.

The research arrives as AI systems increasingly influence high-stakes decisions. From medical diagnosis to criminal justice, these models are being deployed in contexts where risk assessment directly affects human lives.

If a medical AI becomes overly cautious when interacting with female physicians or patients, it could skew treatment recommendations. If a parole assessment algorithm shifts its risk calculations based on gendered language in case files, it could perpetuate systemic inequalities.

The study tested models ranging from tiny half-billion-parameter systems to larger seven-billion-parameter architectures, finding that size did not predict gender responsiveness. Some smaller models showed stronger gender effects than their larger siblings, suggesting this isn't simply a matter of throwing more computing power at the problem.

This is not a problem that can be solved easily. After all, the internet, the vast body of knowledge used to train these models, not to mention our history as a species, is full of stories about men as reckless, brave superheroes who know no fear and women as more cautious and thoughtful. In the end, teaching AIs to think differently may require us to live differently first.
